Python

Status: This page is work in progress...

Last changed: Saturday 2015-01-10 18:32 UTC

Abstract:

Python is a high-level programming language first released by Guido van Rossum in 1991. Python is designed around a philosophy which emphasizes readability and the importance of programmer effort over computer effort. Python core syntax and semantics are minimalist, while the standard library is large and comprehensive. Python is a multi-paradigm programming language (primarily functional, object oriented and imperative) which has a fully dynamic type system and uses automatic memory management -- it is thus similar to Perl, Ruby, Scheme, and Tcl. The language has an open, community-based development model managed by the non-profit Python Software Foundation. While various parts of the language have formal specifications and standards, the language as a whole is not formally specified. The de facto standard for the language is the so-called CPython implementation. Some of the largest projects that use Python are the Zope application server, the Mnet distributed file store, YouTube, and the original BitTorrent client. Large organizations that make use of Python include Google and NASA. Air Canada's reservation management system is written in Python. Python has also seen extensive use in the information security industry -- it is commonly used in exploit development. Also, Python has been successfully embedded in a number of software products as a scripting language. For many OSs (Operating Systems), Python is a standard component -- it ships with most Linux distributions, with FreeBSD, NetBSD, and OpenBSD, and with Mac OS X. From a developers point of view, Python has a large standard library, commonly cited as one of Python's greatest strengths, providing tools suited to many disparate tasks. This comes from a so-called "batteries included" philosophy for Python modules. The modules of the standard library can be augmented with custom modules written in either C or Python. Recently, Boost C++ Libraries includes a library, python, to enable interoperability between C++ and Python. Because of the wide variety of tools provided by the standard library combined with the ability to use a lower-level language such as C and C++, which is already capable of interfacing between other libraries, Python can be a powerful glue language between languages and tools. This page is going to cover various aspects of Python and programming in Python as seen from a developers point of view. Last but not least, note that this page is about Python 3 where applicable and only refers to Python 2 where still necessary at the point of writing.

|

Table of Contents

|

Python, it just fits your brain...

— unknown

Introduction

This sections features as a general get to know Python section in that

it touches on the most profound theoretical and practical subjects.

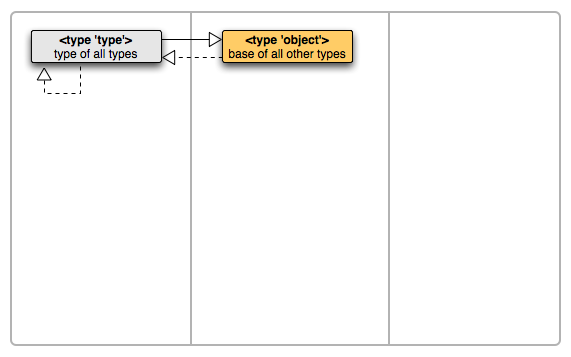

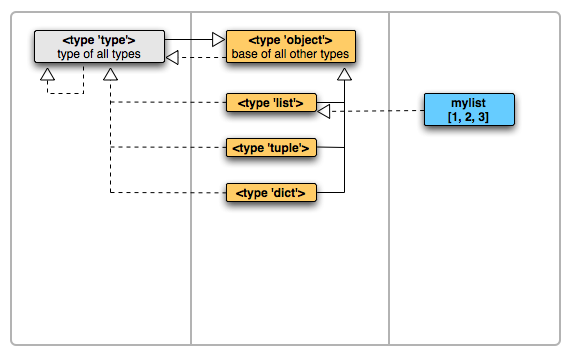

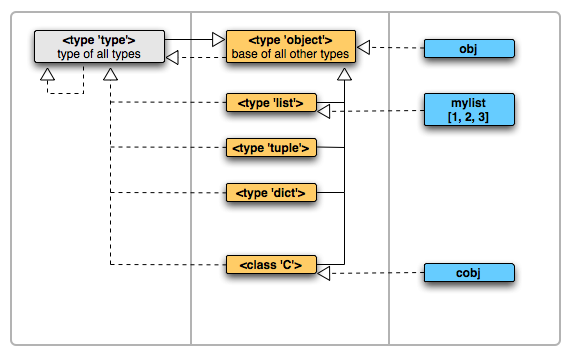

First thing to remember about Python is

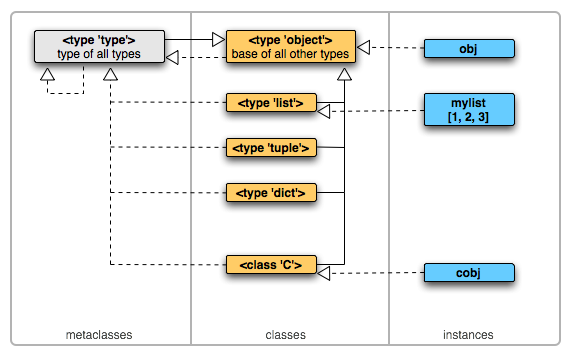

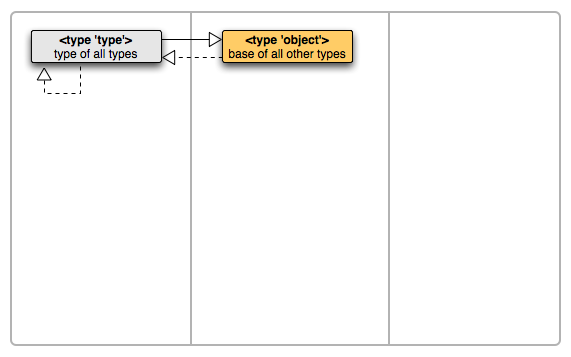

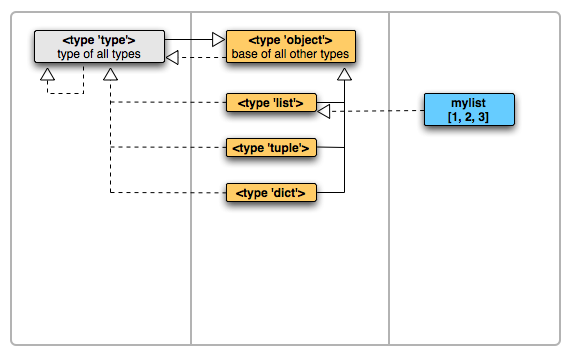

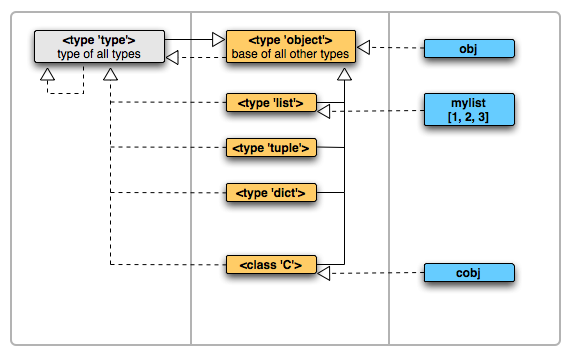

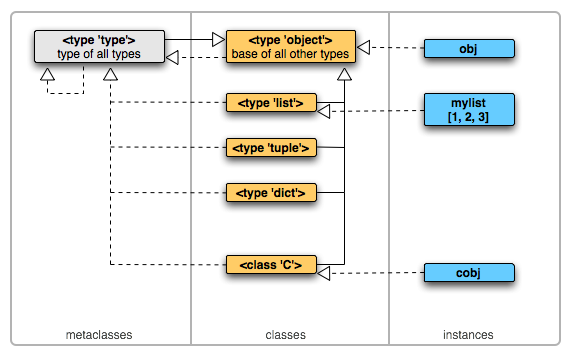

... in Python everything is an object!

-

Strings are objects. Lists are objects. Functions are objects. Classes

are objects. Class instances are objects. Properties are objects.

Modules are objects. Files are objects. Network connections are

objects. Descriptors are objects.... this list goes on and on...

Second most important thing with regards to Python is that

Iterators are everywhere, underlying everything, always just

out of sight.

Both, iterators and objects are explained in detail further down...

Main Usage Areas

So what is it that most people use Python for? Well, there are two

main usage areas:

- Web Applications and

- System Administration and Automation

There are many others too like for example scientific computing or

robotics but those are areas which have a considerably smaller

userbase than the two major areas mentioned above.

Why Python?

Python where we can, C++ where we must... As with many things in

live, simplicity is key. Even more so if by gaining simplicity we do

not have to cut back on features but maybe even gain on both ends.

Wow! Guess what, that thing exists and it goes by the name Python:

Python where we can, C++ where we must — they used (a subset of) C++

for the parts of the software stack where very low latency and/or

tight control of memory were crucial, and Python, allowing more rapid

delivery and maintenance of programs, for other parts.

If you are asking yourself Who are they? in this context, the answer

is: The founders of Google. So, why might someone make the decision

for this technology stack? Easy:

If I can write 10 lines of code in language X to accomplish what took you

100 lines of code in language Y, then my language is more powerful.

or in other words

>>> if succinctness == power:

... print("You are using Python.")

...

...

You are using Python.

>>>

Again you see, simplicity is good, simplicity scales, simplicity

shortens product cycles, simplicity helps reduce time to market and

last but not least, simplicity is more fun — heck, I would rather

spend a few hours and write some useful piece of software rather than

debugging some arcane memory bug in an even more arcane programming

language. Been there, done that...

Everything should be made as simple as possible, but not simpler.

— Albert Einstein

With the following subsections we are going to look at why/what that

often mentioned simplicity might be. I know you want facts, and

rightfully so!

Philosophy

It is important for anyone involved with Python to at least understand

a few basic/core ideas about the language itself:

- Python is FLOSS (Free/Libre Open Source Software) i.e. it is

developed by many rather than one individual or company. There are

no license fees that need to be paid for using it, there is no

risk of a vendor lock-in i.e. developing in Python gives

investment security.

- Python is a high-level programming language i.e. it is a

programming language with strong abstraction from the details of

the computer. Any high-level programming languages generally hides

the details of CPU operations such as memory access models and

management of scope. In comparison to low-level programming

languages, Python has more natural human language elements and its

code is portable across many hardware platforms and operating

systems.

- From a programming paradigm point of view, Python is a

multi-paradigm programming language. A multi-paradigm programming

language is a programming language that supports more than one

programming paradigm e.g. object oriented, functional, aspect

oriented, etc. The basic idea of a multiparadigm programming

language is to provide a framework in which programmers can work

in a variety of styles, freely intermixing constructs from

different paradigms. The design goal of such languages is to allow

programmers to use the best tool for a job, admitting that no one

paradigm solves all problems in the easiest or most efficient way.

- Python is known for its well thought out and easily readable

syntax (e.g. indentation) which in turn boosts productivity and

also makes it a great language for beginners.

- Python is a dynamic language with an dynamic type system. However,

despite having a dynamic type system, Python is strongly typed.

- The automatic memory management of Python is based on its dynamic

type system and a combination of reference counting and

garbage collection.

- Python is fully Unicode aware. There is also excellent support for

internationalization and localization.

- From the very beginning, the overall design concept of Python has

been: Keep the core language to a minimum and provide a large

standard library and means to easily extend the core with own code

and/or third party code.

- Python's philosophy rejects the thinking of there is more than one

way to do it approach to language design in favor of there should

be one (and preferably only one) obvious way to do it.

- As we know, premature optimization is the root of all evil.

Therefore, when speed is a problem, Python programmers tend to

try to optimize bottlenecks by algorithm improvements or data

structure changes, using a JIT (just-in-time compilation)

compiler such as Psyco, rewriting the time-critical functions in

closer to the metal languages such as C, or by translating Python

code to C code using tools like Cython.

Random Stuff

There are quite a few random things eventually interesting...

History of Python

1991 - Dutch programmer Guido van Rossum travels to Argentina

for a mysterious operation. He returns with a large cranial

scar, invents Python, is declared Dictator for Life by legions

of followers, and announces to the world that "There Is Only One

Way to Do It." Poland becomes nervous.

Those who are looking for a serious answer, go use some search engine

;-]

Zen of Python

>>> import this

The Zen of Python, by Tim Peters

Beautiful is better than ugly.

Explicit is better than implicit.

Simple is better than complex.

Complex is better than complicated.

Flat is better than nested.

Sparse is better than dense.

Readability counts.

Special cases aren't special enough to break the rules.

Although practicality beats purity.

Errors should never pass silently.

Unless explicitly silenced.

In the face of ambiguity, refuse the temptation to guess.

There should be one -- and preferably only one -- obvious way to do it.

Although that way may not be obvious at first unless you're Dutch.

Now is better than never.

Although never is often better than *right* now.

If the implementation is hard to explain, it's a bad idea.

If the implementation is easy to explain, it may be a good idea.

Namespaces are one honking great idea -- let's do more of those!

>>>

Everything written in Python should adhere to those principles. Things

like frameworks which are written in Python might have

additional principles/conventions on top of the ones outlined above.

In addition, there are coding styles which we should adhere.

Cheat Sheet or RefCard

Yes, the Internet has plenty of resources on the matter. Here is one

of them.

Quickstart

This subsection is a summary of the semantics and the syntax found in

Python. It can be read and followed along on the command line in less

than an hour.

It is intended as a glance into Python for those who have not had

contact with Python yet, or, for those who want a quick refresh of the

cornerstones that makeup for most of Python's look and feel. Also,

without further notice, note that this page is about Python 3 where

applicable and only refers to Python 2 where still necessary at the

point of writing.

One of the things I like most about Python is that it is not as wordy

as Java/C++ and not as cryptic as Perl but just a programming language

with an pragmatic approach to software development — something I

would also love to see for JavaScript, which somehow has become my

second most used programming language right after Python and before

Java/C++. Enough said, let us now go and ride the snake a little...

Preparations

It is strongly recommended to follow along using Python's built-in

interactive shell. Personally I prefer to use bpython but then the

standard built-in shell is just fine.

One needs to install Python if not installed already e.g. using APT

(Advanced Packaging Tool) by issuing aptitude install python or

install it manually. After installing one should be able to find the

interpreter and start the interactive shell:

sa@wks:~$ which python

/usr/bin/python

sa@wks:~$ python3

Python 3.2 (r32:88445, Feb 20 2011, 19:50:20)

[GCC 4.4.5] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>>

As of now (November 2011) /usr/bin/python still links to Python 2 as

standard Python instead of Python 3 in which case we simply have to

specify python3 as I did above. Also, Python 3 needs to be installed

of course: aptitude install python3 — it is no problem to have both

Python versions installed at the same time. Before we actually start,

let us make sure we run Python 3. I always use bpython and just issue

python because I have some magic that fires up Python 3 and does some

initialization... anyhow, the only thing one needs to know is that

aptitude install python3 installs Python 3python3 starts the interactive shell on Python 3sysconfig.get_python_version() allows us to check which Python

version we are running, just to be sure

Ok, grab your helmets, fasten seat belts... turn ignition...

Basics

Assignment, values, names and the print() function:

>>> foo = 3 # bind (through assignment) name foo to value 3

>>> print(foo) # use print function on object foo

3

>>> bar = "hello world" # a string is a value too

>>> print(bar)

hello world

>>> fiz = foo # bind name fiz to the same value foo is bound to already

>>> print(fiz)

3

>>>

Expressions and statements:

>>> 3 + 2 # an expression is something

5

>>> print(3 + 2) # a statement does something

5

>>> len(bar) # another statement

11

>>>

Code blocks are set apart using whitespace rather than braces.

Conditionals, clauses and loops work as one would expect:

>>> for character in "abc":

... print(character) # 4 spaces per indentation level

...

...

a

b

c

>>> for character in "abc":

... print(character)

... if character in "a":

... print("found character 'a'") # 8 spaces on level 2

...

...

...

a

found character 'a'

b

c

>>> if 4 == 2 + 2:

... print("boolean context evaluated to true")

...

... else:

... print("boolean context evaluated to false")

...

...

boolean context evaluated to true

>>>

Mostly the semantics of while loops can be done more efficiently using

for loops and operators such as in. This is especially true in case we

need a counter in order iterate through a sequence — absent the fact

that when in need of a counter, the experienced Pythoneer would

probably turn to a closures anyway.

Following the link about counters also shows us the use of the range()

function, maybe one of the most used functions next to print() and a

few others. Also, by now it should be clear that lines beginning with

# or everything following a # is a comment, thus ignored by Python.

Data Structures

With only a few built-in Python data structures we can probably cover

80% of use cases. To get to 100% we can then use third party add-ons

(so-called packages and/or modules) written by others or simply build

our custom data structures and maybe also some custom algorithms which

we purposely designed to go with our custom data structures.

Relations amongst Data Structures

We have already seen some data structures — numbers and strings.

Numbers are so-called literals whereas strings are sequences.

Sequences itself are a subset of containers, which in turn is not just

the superset to sequences but also to mappings and sets.

What all data structures have in common is that each of them is either

itself an object or some sort of grouping thereof. Another thing that

is true for any data structure is that it is either mutable (can be

modified in place) or immutable (cannot be modified in place) — place

being location(s) in memory.

Below is a sketch picturing what we just said about how data

structures in Python relate (formatting does not carry any information

but was chosen to make things fit):

o b j e c t

/ \

/ \

literals c o n t a i n e r s

/ \ / | \

/ \ / | \

immutable mutable s e q u e n c e s m a p p i n g s s e t s

/ \ / \ / \ / \

/ \ / \ / \ / \

n u m b e r s etc. immutable mutable mutable immutable immutable mutable

/ | \ / | \ / | / / \

/ | \ / | \ / | / / \

integral complex real/float strings tuples etc. lists etc. dictionaries frozenset set

/ \ / \ / \

/ \ / \ / \

integer boolean decimal binary OrderedDict etc.

The sketch, even if it is not complete but only shows the root and a

few branches, is a good enough approximation and should help with

understanding how data structures in Python relate — they are

basically arranged in a tree structure, based on semantics they carry.

Numbers, Lists, Tuples, Dictionaries

Numbers/Integers

>>> 2

2

>>> type(2) # check for class/type

<class 'int'> # yes, 2 is indeed an integer

Numbers/Floats:

>>> 1.1 + 2.2

3.3000000000000003

>>> type(1.1)

<class 'float'>

Lists

>>> foo = [2, 4, "hello world"] # create a lists with three items

>>> type(foo)

<class 'list'>

>>> foo[0] # get item at index position 0

2

>>> foo[2]

'hello world'

>>> foo[2] = "hello big world" # assign to index position 2

>>> foo

[2, 4, 'hello big world'] # assignment worked because lists are mutable

>>> foo[1:] # get a slice

[4, 'hello big world']

>>> foo[:-1] # slice but with negative upper boundary

[2, 4]

>>> foo[-1:] # negative lower boundary

['hello big world']

Tuples

>>> bar = (2, 4, "hello world")

>>> type(bar)

<class 'tuple'>

>>> bar[0]

2

>>> bar[2]

'hello world'

>>> bar[2] = "hello big world"

Traceback (most recent call last): # because tuples are immutable

File "<input>", line 1, in <module>

TypeError: 'tuple' object does not support item assignment

>>> bar

(2, 4, 'hello world')

>>> bar[1:] # same as for lists

(4, 'hello world')

Dictionaries

- we are going to create a dictionary with three key/value pairs also

known as items

- keys need to be immutable; values can be both, immutable and mutable

- dictionaries are unordered i.e. the order of items is not

guaranteed (see how

foobar changes position from last to first)

>>> fiz = {'foo': 3, 'baz': "hello world", 'foobar': bar} # using bar from above

>>> type(fiz)

<class 'dict'>

>>> fiz

{'foobar': (2, 4, 'hello world'), 'baz': 'hello world', 'foo': 3}

>>> fiz['foo']

3

>>> fiz['foobar']

(2, 4, 'hello world')

>>> fiz['baz'] = "hello big world"

>>> fiz['baz']

'hello big world'

>>> fiz

{'foobar': (2, 4, 'hello world'), 'baz': 'hello big world', 'foo': 3}

>>> fiz['foobar'][1] # nested

4

>>>

Built-in Goodies

So far we have only scratched the subject of built-in data structures

in Python. We already know that each data structure is in fact an

object. This object holds some sort of data e.g. a list has items.

It is not far of to think that it would be nice if those objects also

had ways to operate on the data they store e.g. reverse the items of

a list etc. Guess what, that is exactly the case — every data

structure comes with ready-made functionality to operate on the data

it stores! Let us just have a quick look at a few:

>>> foo

[2, 4, 'hello big world']

>>> foo.reverse() # works because lists are mutable

>>> foo

['hello big world', 4, 2]

>>> bar.count(4) # number occurrences of value 4 in tuple bar

1

>>> bar.index(2) # index position of value 2

0

>>> fiz.items()

dict_items([('foobar', (2, 4, 'hello world')), ('foo', 3), ('baz', 'hello big world')])

>>> fiz.keys()

dict_keys(['foobar', 'foo', 'baz'])

>>> fiz.values()

dict_values([(2, 4, 'hello world'), 3, 'hello big world'])

>>>

By the way, those built-in goodies are actually method objects linked

to from each data structure instance but let us not get ahead of

ourselves for now...

Functions

The next step is to combine all we have seen so far and group behavior

(code) in order to more efficiently manage state (data). Functions are

basically just code blocks (set apart by indentation) which we can

refer to by name and which body contains statements and expression

which semantically belong together.

However, grouping is just one thing that is nice about using

functions. Being able to refer to code blocks by name is nice too.

However, where the real benefit comes into play is when we start

reusing those code blocks in order to avoid code duplication.

>>> def amplify(foo): # function signature; foo is its only parameter

... print(foo * 3) # function body

...

...

>>> amplify('hi') # works for strings

hihihi

>>> amplify(2) # and numbers...

6

>>> type(amplify)

<class 'function'>

>>> bar = amplify # now bar and amplify, both are bound to

>>> type(bar)

<class 'function'>

>>> bar is amplify # the same function object

True

>>> bar

<function amplify at 0x24f3490> # 0x24f3490 address of function object in memory

>>> amplify

<function amplify at 0x24f3490>

>>> bar(2)

6

>>>

The most important thing to understand about functions is that they

are objects too, just like strings etc. What that means is that we can

assign them to arbitrary names — like we just did when we bound the

name bar (through assignment) to the function object located at memory

address 0x24f3490. Again, that is two (or more) names bound to one

object!

It is also important to understand that functions can take arguments,

something quite important when we want to reuse code where the

processing (read code/logic) stays the same but of course input data

varies (e.g. can be hi or 2 or whatever, as shown above).

At this point there is no need to concern ourselves with the concepts

of scope and namespaces, as we will see more on them later. The last

thing which is certainly necessary to know with regards to functions

is that they always return something.

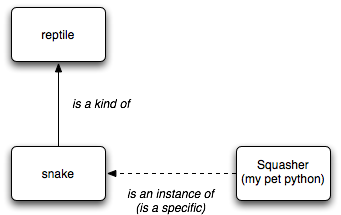

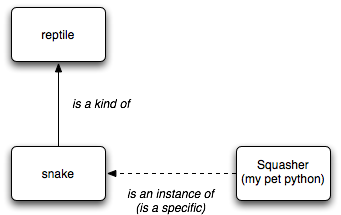

Custom Data Structures

Next to many built-in data structures we can create our custom ones —

the terms class/type, superclass/supertype, subclass/subtype, instance

and OOP (Object-Oriented Programming), all hint that we are dealing

with custom data structures. I advice people to maybe go visit each of

those links, maybe read the first one or two paragraphs and then come

back for a light introduction into custom data structures in Python:

1 >>> class Foo: # creating a class/type

2 ... pass

3 ...

4 ...

5 >>> type(Foo)

6 <class 'type'>

7 >>> class Bar(Foo): # subclassing

8 ... def __init__(self, firstname=None, surname=None): # a (special) method

9 ... self.firstname = firstname or None

10 ... self.surname = surname or None

11 ...

12 ... def print_name(self): # another method

13 ... print("I am {} {}.".format(self.firstname, self.surname))

14 ...

15 ...

16 ...

17 >>> type(Bar)

18 <class 'type'>

19 >>> Bar.__bases__

20 (<class '__main__.Foo'>,)

21 >>> aperson = Bar(firstname="Niki", surname="Miller") # instantiating

22 >>> isinstance(aperson, Bar)

23 True

24 >>> isinstance(aperson, Foo)

25 True

26 >>> aperson.print_name() # method call

27 I am Niki Miller.

28 >>>

In only 28 lines we have shown about 90% there is to know about custom

data structures in Python. In line 1, we use the class keyword to

create a new class/type — let me thrown in a referrer to

naming conventions now for the first time. We then use pass in the

class body because, actually, we do not want to use Foo for anything

other than subclassing Bar from it in line 7.

With Bar we actually implement a few things — why not create a custom

data structure used to store basic information related to a person,

such as his/her name. Yes, let us do that!

Since a class/type is basically a blueprint used to create instances

from, every instance (individual person in our case) will have a

different name of course. We want to store a persons name

automatically right after creating the instance i.e. when it gets

initialized — that is what the __init__() special method does,

initialising a fresh-out-of-the-oven instance.

We can put whatever we want into the body of __init__(), such as for

example grabbing the information about a persons name provided to us

when an instance is created. We then store the name on the instance

(lines 9 and 10).

That is what self is used for... referencing the instance in question

i.e. either accessing already stored information (line 13) or storing

information (lines 9 and 10) on an instance of some class/type.

Also, note the use of or which in our current case means that only if

we provide e.g. firstname when instantiating (line 21), is it stored

on the instance (self.firstname). If we do not provide it, None is

automatically stored (or whatever follows or in lines 9 and 10; not to

be confused with None from the function signature in line 8, that is

the default parameter value, which just happens to be None as well).

We already know the built-in type() function from above, we use it to

find out to which class/type a certain name is bound to. In our case

it is type type, twice, lines 6 and 18. Let us not worry about type

for now. What is more interesting and of more practical use is a

closer look at the concept of inheritance since we subclassed Bar from

Foo — no special reason really, just to showcase how subclassing is

done i.e. we could have put lines 8 to 13 instead of lines 2 and be

fine without ever creating Bar.

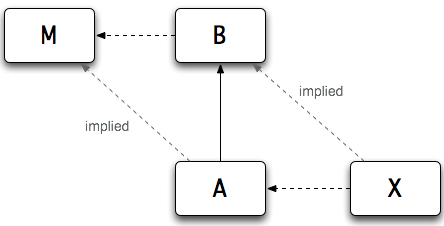

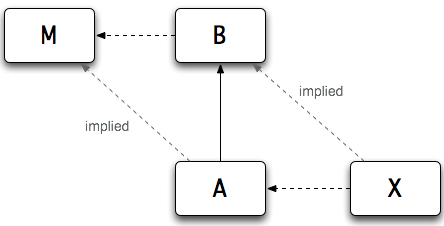

Line 22 and 23 show how we can check whether or not aperson really is

an instance of Bar. And guess what, since Bar is a subclass of Foo (or

looked at it the other way around, Foo a superclass/supertype to Bar)

aperson is of course also a instance of Foo (lines 24 and 25).

print_name is a method (the other name for functions when defined

inside a class/type) which means that aside from the fact that it has

the implicit self argument, it really is a function under the hood,

the stuff we already looked at above. Line 26 shows how we call a

method which now lives on (or actually is referenced from) the aperson

instance. () is the call operator.

Last but not least, a word on inheritance... The nifty thing from

line 19 and 20 is using a so-called class/type attribute to see if Bar

in fact has a superclass/supertype other than the built-in Python one

i.e. if it is the topmost not Python built-in class/type within the

inheritance chain or not. Turns out it is not because it is subclassed

from Foo.

Standard Library

It is a must for any Pythoneer to know about the

Python standard library, what it contains, as well as

how and when to use it. It has lots of useful code, highly optimized,

for many problems seen by most people over and over again for all

kinds of problem domains across many industries. Using bits and pieces

form the standard library always starts with importing code which can

then be used right away. Let us look at some examples:

>>> import math

>>> math.pi

3.141592653589793

>>> math.cos((math.pi)/3)

0.5000000000000001

>>> from datetime import date

>>> today = date.today()

>>> dateofbirth = date(1901, 11, 11)

>>> age = today - dateofbirth

>>> age.days

39993

>>> from datetime import datetime

>>> datetime.now()

datetime.datetime(2011, 5, 11, 22, 52, 20, 42708)

>>> foo = datetime.now()

>>> foo.isoformat()

'2011-05-11T22:52:26.306873'

>>> foo.hour

22

>>> foo.isocalendar()

(2011, 19, 3)

>>> import zlib

>>> foo = b"loooooooooooooong string..."

>>> len(foo)

28

>>> compressedfoo = zlib.compress(foo)

>>> len(compressedfoo)

23

>>> zlib.decompress(compressedfoo)

b'loooooooooooooong string...'

>>> import random

>>> foo = [3, 6, 7]

>>> random.shuffle(foo)

>>> foo

[3, 7, 6]

>>> random.choice(['gym', 'no gym'])

'gym'

>>> random.randrange(10)

3

>>> random.randrange(10)

7

>>> import glob

>>> glob.glob('*txt')

['file.txt', 'myfile.txt']

>>> import os

>>> os.getcwd()

'/tmp'

>>> os.environ['HOME']

'/home/sa'

>>> os.environ['PATH'].split(":")

['/usr/local/bin',

'/usr/bin',

'/bin',

'/usr/local/games',

'/usr/games',

'/home/sa/0/bash',

'/home/sa/0/bash/photo_utilities']

>>> os.uname()

('Linux',

'wks',

'2.6.38-2-amd64',

'#1 SMP Thu Apr 7 04:28:07 UTC 2011',

'x86_64')

>>> import platform

>>> platform.architecture()

('64bit', 'ELF')

>>> platform.python_compiler()

'GCC 4.4.5'

>>> platform.python_implementation()

'CPython'

>>> from urllib.request import urlopen

>>> bar = urlopen('')

>>> bar.getheaders()

[('Connection', 'close'),

('Date', 'Wed, 11 May 2011 22:08:15 GMT'),

('Server', 'Cherokee/1.0.8 (Debian GNU/Linux)'),

('ETag', '4dc82a11=6364'),

('Last-Modified', 'Mon, 09 May 2011 21:53:21 GMT'),

('Content-Type', 'text/html'),

('Content-Length', '25444')]

>>> import timeit

>>> foobar = timeit.Timer("math.sqrt(999)", "import math")

>>> foobar.timeit()

0.18407893180847168

>>> foobar.repeat(3, 100)

[2.7894973754882812e-05, 2.3126602172851562e-05, 2.288818359375e-05]

>>> import sys

>>> sys.path

['',

'/usr/local/bin',

'/usr/lib/python3.2',

'/usr/lib/python3.2/plat-linux2',

'/usr/lib/python3.2/lib-dynload',

'/usr/local/lib/python3.2/dist-packages',

'/usr/lib/python3/dist-packages']

>>> import keyword

>>> keyword.iskeyword("as")

True

>>> keyword.iskeyword("def")

True

>>> keyword.iskeyword("class")

True

>>> keyword.iskeyword("foo")

False

>>> import json

>>> print(json.dumps({'foo': {'name': "MongoDB", 'type': "document store"},

... 'bar': {'name': "neo4j", 'type': "graph store"}},

... sort_keys=True, indent=4))

{

"bar": {

"name": "neo4j",

"type": "graph store"

},

"foo": {

"name": "MongoDB",

"type": "document store"

}

}

>>>

... and that was not even 0.1% of what is available from the Python

standard library!

Scripts

Most people, before they write applications composed of several

files/libraries (i.e. modules and/or packages), probably start out

writing themselves simple scripts in order to automate things such as

system administration tasks.

That is actually the perfect way into Python after working through

some quickstart section such as this one because, in order to create

and execute scripts, one needs to know about the pound bang line,

import, docstrings, how to use Python's standard library, as well as

what it means to write pythonic code.

On-disk Location

Python can live anywhere on the filesystem and there are quite a few

ways to influence and determine where things go...

PYTHONPATH Variable

Well, actually we are not talking about PYTHONPATH alone here but

instead we take a look at the bigger picture i.e. how does Python

find/know about code that exists on the filesystem so we can make use

of it? To answer this question, let us take a look at Python's

module search behavior and how we can influence it.

Finding Code on the Filesystem

If we have code (Python package or modules) somewhere on the

filesystem that we want Python to know about, we need to import that

code using the import statement. For import to work, Python needs to

know where to find the code on the filesystem. What a no brainer eh?

;-]

So how do we tell Python about the places where it should look for

code? The variable sys.path holds a bunch of paths also known as

module search paths. Python searches those directories for code so we

can start using it by importing it. In order for Python to find our

code on the filesystem, we have two choices:

- We put our code into one directory that is already part of

sys.path or

- We add another directory to

sys.path

Before we start, let us take a look at sys.path as it looks like in

its default setup:

sa@wks:~$ python

>>> dir()

['__builtins__', '__doc__', '__name__', '__package__']

>>> import pprint

>>> import sys

>>> pprint.pprint(sys.path)

['',

'/usr/lib/python3.2',

'/usr/lib/python3.2/plat-linux2',

'/usr/lib/python3.2/lib-dynload',

'/usr/lib/python3.2/dist-packages',

'/usr/local/lib/python3.2/dist-packages']

If we decided to add our own or some third party code without adding a

new directory to sys.path, then /usr/local/lib/python3.2/dist-packages

would be the right place to put it. However, this might not work for

the following reasons:

- we do not want to clutter the default directories with our

own/third party code

- we might not have permissions to do so e.g. no root permissions

- we simply want to keep our code somewhere else on the filesystem

If we want/have to add another directory to sys.path, then there are

two possibilities:

- we do it manually every time we start the Python interpreter or

- we automate the process so that maybe even Python code itself

could take care of it

Manually adding to sys.path

This one is straight forward as we only need to append to sys.path:

>>> import os

>>> sys.path.append('/tmp')

>>> sys.path.append(os.path.expanduser('~/0/django'))

>>> pprint.pprint(sys.path)

['',

'/usr/lib/python3.2',

'/usr/lib/python3.2/plat-linux2',

'/usr/lib/python3.2/lib-dynload',

'/usr/lib/python3.2/dist-packages',

'/usr/local/lib/python3.2/dist-packages',

'/tmp',

'/home/sa/0/django']

>>>

Adding directories manually is quick and certainly nice while doing

development/testing but it is not what we want for some permanent

setup like for example a long-term development project or a production

site. For those, we want to add directories to sys.path automatically

which is shown below.

Automatically adding to sys.path

When a module named duck is imported, the interpreter searches for a

file named duck.py in the current working directory, and then in the

list of directories specified by the environment variable PYTHONPATH

— this environment variable has the same syntax as the shell variable

PATH, that is, a list of directory names separated by colons.

sa@wks:~$ echo $PATH

/usr/local/bin:/usr/bin:/bin:/usr/games:/home/sa/0/bash

sa@wks:~$

When PYTHONPATH is not set, or when duck.py is not found in the

current working directory, the search continues in an

installation-dependent default path.

Most Linux distributions include Python as a standard part of the

system, so prefix and exec-prefix are usually both /usr on Linux. If

we build Python ourselves on Linux (or any Unix-like system), the

default prefix and exec-prefix are /usr/local.

sa@wks:~$ python

>>> import sys

>>> sys.prefix

'/usr'

>>>

sa@wks:~$

So now we know how finding code on the filesystem works. This however

does not help us much since we do not want to use any of the default

paths/directories listed in sys.path. We also do not want to manually

add directories to sys.path every time the Python interpreter gets

restarted.

Although the standard method so far is to add directories to

PYTHONPATH, this is suboptimal for two reasons:

- it is only valid for one particular system user (e.g. for

production sites) respectively a normal Unix/Linux user account if

we are writing code

- using

PYTHONPATH is not really portable since everywhere we want to

run our code, we need to adapt PYTHONPATH

So what do we do? Piece of cake, we use .pth files. Those files are

simple text files containing paths to be added to sys.path, one path

per line.

All we need to do is to put our .pth files into one of the directories

the site module knows about — without further explanation, one of

those directories is /usr/local/lib/python<version>/dist-packages e.g.

/usr/local/lib/python3.1/dist-packages if we are using Python version

3.1. The way it works is really easy:

1 sa@wks:/tmp$ mkdir test; cd test; echo -e "foo\nbar" > our_path_file.pth

2 sa@wks:/tmp/test$ mkdir foo bar

3 sa@wks:/tmp/test$ echo 'print("inside foo.py")' > foo/foo.py

4 sa@wks:/tmp/test$ echo 'print("inside bar.py")' > bar/bar.py

5 sa@wks:/tmp/test$ type ta

6 ta is aliased to `tree -a -I \.git*\|*\.\~*\|*\.pyc'

7 sa@wks:/tmp/test$ ta ../test/

8 ../test/

9 |-- bar

10 | `-- bar.py

11 |-- foo

12 | `-- foo.py

13 `-- our_path_file.pth

14

15 2 directories, 3 files

16 sa@wks:/tmp/test$ cat our_path_file.pth

17 foo

18 bar

19 sa@wks:/tmp/test$ python3

20 Python 3.1.1+ (r311:74480, Oct 12 2009, 05:40:55)

21 [GCC 4.3.4] on linux2

22 Type "help", "copyright", "credits" or "license" for more information.

23 >>> import pprint, sys, site

24 >>> pprint.pprint(sys.path)

25 ['',

26 '/usr/lib/python3.1',

27 '/usr/lib/python3.1/plat-linux2',

28 '/usr/lib/python3.1/lib-dynload',

29 '/usr/lib/python3.1/dist-packages',

30 '/usr/local/lib/python3.1/dist-packages']

31 >>> site.addsitedir('/tmp/test')

32 >>> pprint.pprint(sys.path)

33 ['',

34 '/usr/lib/python3.1',

35 '/usr/lib/python3.1/plat-linux2',

36 '/usr/lib/python3.1/lib-dynload',

37 '/usr/lib/python3.1/dist-packages',

38 '/usr/local/lib/python3.1/dist-packages',

39 '/tmp/test',

40 '/tmp/test/foo',

41 '/tmp/test/bar']

42 >>> import foo

43 inside foo.py

44 >>> import foo

45 >>> import bar

46 inside bar.py

Python now finds our modules foo.py and bar.py thanks to

our_path_file.pth. Note that what we did in lines 3 and 4 are in place

only to show that importing works as we see with lines 42 to 46 —

modules are not meant to do things (e.g. print text) when they are

imported. Note also, that importing a module more than once does not

execute the code inside again (lines 42 to 44).

site.addsitedir from line 31 is quite a nifty thing — it adds a

directory to sys.path and processes its .pth file(s). That it worked

can be seen from lines 39 to 41.

Certainly, no one really cares to use /tmp for serious

development/deployment work if /tmp is set up the usual way

(everything in /tmp will vanish on reboot).

What I often do is to add to sys.path so that it is only added for one

particular Python version and only for my user account sa.

47 >>> site.USER_SITE

48 '/home/sa/.local/lib/python3.1/site-packages'

49 >>> import os

50 >>> dir()

51 ['__builtins__', '__doc__', '__name__', '__package__', '__warningregistry__', 'bar', 'foo', 'os', 'pprint', 'site', 'sys']

52 >>> mypth = os.path.join(site.USER_SITE, 'mypath.pth')

53 >>> print(mypth)

54 /home/sa/.local/lib/python3.1/site-packages/mypath.pth

55 >>> module_paths_to_add_to_sys_path = ["/home/sa/0/django", "/home/sa/0/bash"]

56 >>> if not os.path.isdir(site.USER_SITE):

57 ... os.makedirs(site.USER_SITE)

58 ...

59 >>> with open(mypth, "a") as f:

60 ... f.write("\n".join(module_paths_to_add_to_sys_path))

61 ... f.write("\n")

62 ...

63 33

64 1

65 >>> pprint.pprint(sys.path)

66 ['',

67 '/usr/lib/python3.1',

68 '/usr/lib/python3.1/plat-linux2',

69 '/usr/lib/python3.1/lib-dynload',

70 '/usr/lib/python3.1/dist-packages',

71 '/usr/local/lib/python3.1/dist-packages',

72 '/tmp/test',

73 '/tmp/test/foo',

74 '/tmp/test/bar']

75 >>> site.addsitedir(site.USER_SITE)

76 >>> pprint.pprint(sys.path)

77 ['',

78 '/usr/lib/python3.1',

79 '/usr/lib/python3.1/plat-linux2',

80 '/usr/lib/python3.1/lib-dynload',

81 '/usr/lib/python3.1/dist-packages',

82 '/usr/local/lib/python3.1/dist-packages',

83 '/tmp/test',

84 '/tmp/test/foo',

85 '/tmp/test/bar',

86 '/home/sa/.local/lib/python3.1/site-packages',

87 '/home/sa/0/django',

88 '/home/sa/0/bash']

89 >>>

90 sa@wks:/tmp/test$

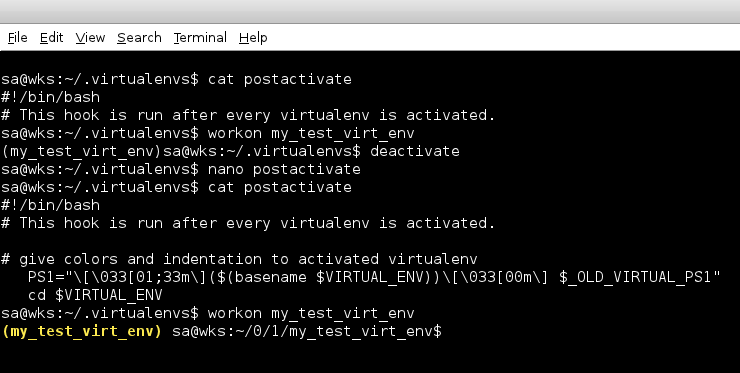

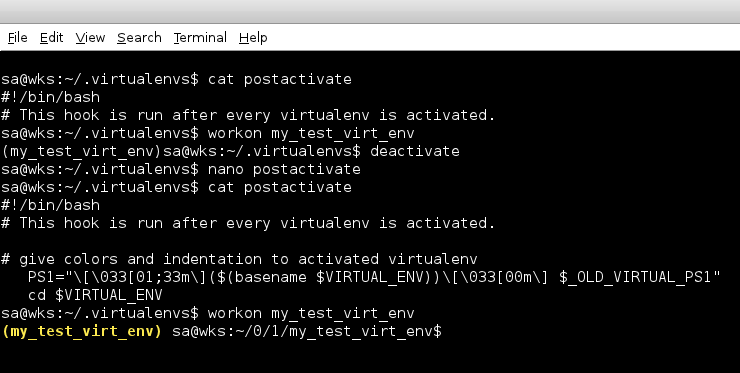

Virtual Environment

A standard system has what is called a main Python installation also

known as global Python context/space i.e. a Python interpreter living

at /usr/bin/python and a bunch of modules/packages installed into the

module search paths.

Another way to have modules/packages installed would be to use

virtualenv. It can be used to create isolated Python contexts/spaces

i.e. those virtual environments can have their own Python interpreter

as well as their own set of modules/packages installed and therefore

have no connection with the global Python context/space whatsoever.

Note that we can not just clone the global Python context/space or

create an entirely separated Python context/space to work with, but we

can also link any directories into any virtual environment. This means

ultimate flexibility without risking to damage the existing main

Python installation also known as global Python context/space.

Configuration Information

The sysconfig module provides access to Python's configuration

information like the list of installation paths and the configuration

variables relevant for the current platform.

Since Python 3.2 we can issue python -m sysconfig on the command line

which will give us detailed information about our setup:

sa@wks:~$ python -m sysconfig

Platform: "linux-x86_64"

Python version: "3.2"

Current installation scheme: "posix_prefix"

Paths:

data = "/usr"

include = "/usr/include/python3.2mu"

platinclude = "/usr/include/python3.2mu"

platlib = "/usr/lib/python3.2/site-packages"

platstdlib = "/usr/lib/python3.2"

purelib = "/usr/lib/python3.2/site-packages"

scripts = "/usr/bin"

stdlib = "/usr/lib/python3.2"

Variables:

ABIFLAGS = "mu"

AC_APPLE_UNIVERSAL_BUILD = "0"

[skipping a lot of lines...]

py_version = "3.2"

py_version_nodot = "32"

py_version_short = "3.2"

srcdir = "/home"

userbase = "/home/sa/.local"

sa@wks:~$

Interpreted

Python is an interpreted language, as opposed to a compiled one,

though the distinction can be blurry because of the presence bytecode.

This means that Python source code files (.py) can be run directly

without explicitly creating an executable which is then run.

Interpreted languages typically have a shorter development/debug cycle

than compiled ones, though their programs generally also run more

slowly, often by several orders of magnitude.

In the end we always need to decide on a per case basis — there is no

one fits all possible use cases programming language out there...

Interpreters

There are several implementations...

WRITEME

CPython

PyPy

Unladen Swallow

Stackless Python

Bytecode

Python source code (.py) is compiled into bytecode (.pyc), the

internal representation of a Python program with the CPython

interpreter.

Bytecode is also cached in .pyc and .pyo (optimized code) files so

that executing the same piece of source code is way faster from the

second time onwards (recompilation from source to bytecode can be

avoided).

This intermediate language is said to run on a virtual machine that

executes the machine code corresponding to each bytecode e.g. CPython,

Jython, etc. That said, bytecodes are not expected to work on

different Python virtual machines nor can we expect them to work

across Python releases on the same virtual machine.

Also, note that .pyc files contain a magic number, just like every

other executable file on Unix-like operating systems.

Garbage Collection

Not the thing your neighbours are talking about when referring to your

car but rather the automatic memory management of Python which is

based on its dynamic type system and a combination of

reference counting and garbage collection.

In a nutshell: once the last reference to an object is removed, the

object is deallocated... that is, left floating around in memory

until deleted/overwritten. The memory it occupied is said to be freed

and possibly immediately reused by another (new) object. Python's

memory management is smart enough to detect and break cyclic

references between objects that might otherwise occupy memory

indefinitely, which in its worst case might cause memory shortage.

Reference Count

The number of references to an object. When the reference count of an

object drops to zero, it is deallocated. Reference counting is

generally not visible to Python code, but it is a key element of the

CPython implementation.

The sys module defines a getrefcount() function that can be called

from Python code in order to return the reference count for a

particular object.

Pieces of the Puzzle

In a way it is like doing a puzzle... small bits and pieces are used

to assemble bigger ones, which are used to assemble even bigger ones

which in turn make for a nice whole... Let us have a look at various

kinds of blocks and how they fit together:

Working Set

A collection of distributions available for importing. These are the

distributions that are on the sys.path variable. At all times there

can only be one version of a distribution in a working set.

Working sets include all distributions available for importing, not

just the sub-set of distributions which have actually been imported

using the import statement.

Standard Library

Python's standard library is very extensive, offering a wide range of

facilities. The library contains built-in modules (written in C) that

provide access to system functionality such as file I/O that would

otherwise be inaccessible to Python programmers, as well as modules

written in Python that provide standardized solutions for many

problems that occur in everyday programming.

Some of these modules are explicitly designed to encourage and enhance

the portability of Python programs by abstracting away

platform-specifics into platform-neutral APIs.

Pro

The argument for having a standard library, aside from the afore

mentioned is that it also helps with what is known as the selection

problem.

This is the problem of picking a third party module (sometimes even

finding it, although PyPI helps with that) and figuring out if it is

any good. Simply figuring out the quality of a module is a lot of

work, and the amount of work multiplies drastically if there are

several third party modules that seem to cater to the same problem

domain at hand.

Often, the only way we can really tell if a package/module is going to

work well is to actually try using it. Generally this has to be done

in a real program under real use case circumstances which means that

if we picked poorly we may have wasted time and effort. Even if we can

rule out a piece of code relatively early, we had to spend time to

read documentation and skim over code.

And frankly speaking, it is frustrating to run into near misses i.e.

packages/modules that almost do what we need and almost work but in

the end have some edge cases unsolved. Faced with this, it often at

least feels easier to write something from scratch ourselves if what

we want is not too much work anyway.

When a module has made it into the standard library, we do not have to

go through all of this (mostly true) as we can just use the

package/module/class/function/etc., secure in the confidence that this

is a good implementation of whatever problem we need to solve.

Someone else has already gone through all of the quality assurance

process, and if there were multiple implementations, somebody has

probably either picked the best one or at least determined that they

are more or less equivalent and so we are not missing anything very

important by not looking at the other options.

Contra

However, there are more and more voices saying that the standard

library has become to big and should be cut down or set aside from

Python core (the interpreter) release cycles altogether (releasing

more often than core).

The argument is that once code is included into the standard library,

it stifles innovation on that particular area (because it is tied to

release cycles of Python core and must maintain full backwards

compatibility) and discourages other developers from innovating in

that same area.

Module, Package

We can think of modules as extensions/add-on/plugins that can be

imported into Python to extend its capabilities beyond the core i.e.

the interpreter itself.

A module is usually just a file on the filesystem, containing source

code (statements, functions, classes, etc.) for a particular use case

e.g. draw.py might be a module to draw things like circles. A package

can be thought of as a directory containing one or more modules

(files) or other packages i.e. we could have a package called graphics

that would contain the modules draw.py and colorize.py

sa@sub:/tmp$ mkdir graphics; touch graphics/{draw,colorize}.py; ta graphics

graphics

|-- colorize.py

`-- draw.py

0 directories, 2 files

sa@sub:/tmp$ type ta

ta is aliased to `tree --charset ascii -a -I \.git*\|*\.\~*\|*\.pyc'

sa@sub:/tmp$

There are two ways how modules and/or packages are distributed:

- The standard library is a collection of modules and packages which

ships with almost any Python core installation. Using those

packages or modules is easy. All we have to is import them e.g.

import module or from module import function and so forth — we do

not need to explicitly install them onto our system.

- Third-party modules/packages are distributed through PyPI or other

means. Importing works the same, what is different however is that

we do need to get those packages and/or modules onto our system

first. This is straight forward in case we find what we need on

PyPI and if we use tools like PIP for example. In case we do not

use PyPI and/or PIP, more manual labour might be involved to get

packages/modules installed before we can import it.

When importing, it has become good practice to import in the following

order, one import per line:

- built-in also known as standard library modules e.g.

sys, os, etc.

- third-party modules (anything installed in Python's site-packages

directory) e.g.

fabric, jinja2, supervisor, django-mongodb-engine,

pymongo, gunicorn, etc.

- our own modules

One good example for a Python package can be found in Django where

every project and the applications it contains is/are in fact Python

packages.

Get a List of available Modules

Use help('modules'). Another, probably more pythonic way is to look at

sys.modules which is a dictionary containing all the modules that have

ever been imported since Python was started. The key is the module

name, the value is the module object. We only look at the first four

keys for demonstration purposes:

>>> sys.modules.keys()[:4]

['pygments.styles', 'code', 'opcode', 'distutils']

>>>

Modules create Namespaces

Modules play an important role in Python since they create namespaces

when being imported.

Organize Modules

It is recommended to organize modules in a particular way.

__main__

When we run a Python script then the interpreter treats it like any

other module i.e. it gets its own global namespace. There is one

difference however: the interpreter assigns __main__ to its __name__

special attribute rather than its actual module name, same thing that

happens to __name__ within an interactive interpreter session. We

often see things like this:

>>> if __name__ == '__main__':

... print("We are either using the interpreter interactively or we just executed a script.")

...

...

We are either using the interpreter interactively or we just executed a script.

>>> __name__

'__main__'

>>>

What it does is change semantics based on whether or not we run the

.py script/file/module from the command line or whether we import the

script/file/module from another module.

Extension Module

This is software written in the same low-level language the particular

Python implementation is written in e.g. C/C++ for CPython or Java for

Jython.

The extension module is typically contained in a single dynamically

loadable and pre-compiled file e.g. a .so (shared object file) on

Unix-like operating systems like Linux, a .dll on Windows or a Java

class file in case of Jython.

built-in Modules

sys.builtin_module_names returns a tuple with all the module names

which are built-in with the interpreter:

>>> import sys

>>> from pprint import pprint as pp

>>> pp(sys.builtin_module_names)

('__main__',

'_ast',

'_bisect',

'_codecs',

'_collections',

'_ctypes',

'_elementtree',

'_functools',

'_hashlib',

'_heapq',

'_io',

'_locale',

'_pickle',

'_random',

'_socket',

'_sre',

'_ssl',

'_struct',

'_symtable',

'_thread',

'_warnings',

'_weakref',

'array',

'atexit',

'binascii',

'builtins', # contains built-in functions, exceptions, and other objects

'cmath',

[skipping a lot of lines...]

'zipimport',

'zlib')

>>>

Note that the builtins module is one of the built-in modules with the

Python interpreter.

__builtin__, builtins

We have built-in functions like abs(). Those live in a module called

builtins (__builtin__ in Python 2) which creates its own namespace:

>>> import __builtin__

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ImportError: No module named __builtin__

>>> import sys; sys.version[:3]

'3.3'

>>> import builtins, pprint

>>> builtins.__doc__.splitlines()[0]

'Built-in functions, exceptions, and other objects.'

>>> pprint.pprint(list(builtins.__dict__.items())[::15])

[('bytearray', <class 'bytearray'>),

('oct', <built-in function oct>),

('bytes', <class 'bytes'>),

('ImportWarning', <class 'ImportWarning'>),

('filter', <class 'filter'>),

('open', <built-in function open>),

('hasattr', <built-in function hasattr>),

('id', <built-in function id>),

('ZeroDivisionError', <class 'ZeroDivisionError'>)]

>>>

As can be seen, the builtins module contains what its __doc__

attribute says... objects, exceptions and functions. Last but not

least, note that the builtins module is itself a built-in module with

the Python interpreter i.e. we are talking about a built-in module

(builtins) which contains all the built-in functions, exceptions and a

bunch of different objects which we can access/use without importing

anything because it is all baked into the interpreter already.

__builtins__

As an implementation detail, most modules have the name __builtins__

(note the plural name and the underscores) made available as part of

their globals. The value of __builtins__ is usually either this module

or the value of this modules's __dict__ attribute. Since this is an

implementation detail, it may not be used by alternate implementations

of Python.

__future__

Basically what the name promises, it is a module that brings future

features which are not enabled with the current version of Python core

(the interpreter) by default. Simply using import __future__ will

enable such features. Go here for more information.

Finder, Loader, Importer

To use functionality which is not built-in with Python core i.e. the

interpreter itself, we need to get it from somewhere else e.g. the

Python Standard Library or some module/package. This is called

importing — basically everything that involves the import statement.

This process of importing is, as many other things, specified by a

so-called protocol. The Importer protocol involves two objects: a

finder and a loader.

- The finder object returns a loader object if the module was found,

or

None otherwise. A finder object defines a find_module() object.

- The loader object returns the loaded module or raises an exception,

preferably

ImportError if an existing exception is not being

propagated. A loader object defines a load_module() method.

In many cases the finder and loader are one and the same object i.e.

find_module() would just return self. The combined functionality of

the finder and loader object is called importer. See PEP 302 for more

information.

How to Import

The way we import modules effects the way we use namespaces quite a

bit. Go here for more information on the matter.

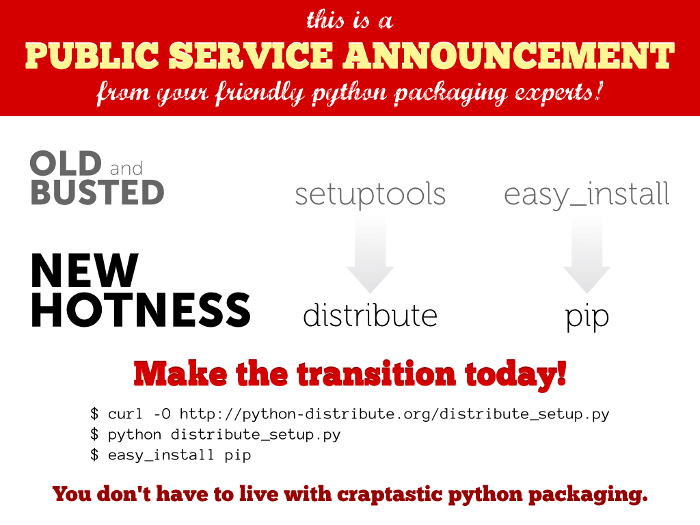

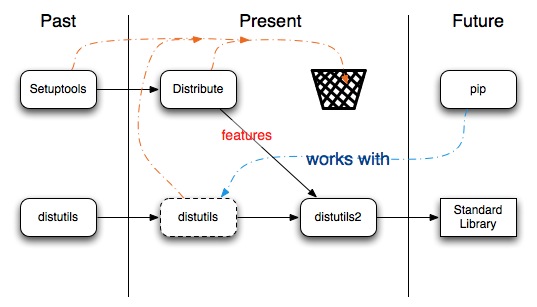

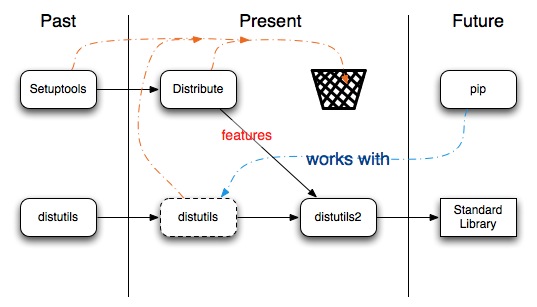

Distutils, Setuptools, Distribute

Although those tools have nothing to do with writing source code

itself, they are needed to work with the whole Python ecosystem. Go

here and here for more information.

PyPI

The PyPI (Python Package Index) is the default packaging index for the

Python community (same CPAN is for Perl). Package managers such as

EasyInstall, zc.buildout, PIP and PyPM use PyPI as the default source

for packages and their dependencies. PyPI is open to all Python

developers to consume and distribute their distributions.

Distribution

Not to be confused with Linux distributions e.g. Debian, Suse, Ubuntu,

etc. A Python distribution is a versioned and compressed archive file

(e.g. pip-0.9.tar.gz) which contains Python packages, modules, and

other resource files. The distribution file is what an end-user will

download from the Internet and install.

A distribution is often mistakenly called a package — this is the

term commonly used in other fields of computing. For example Debian

calls these files package files (.deb). However, in Python, the term

package refers to an importable directory. In order to distinguish

between these two concepts, the compressed archive file containing

code is called a distribution.

Project

A library, framework, script, plugin, application, or collection of

data or other resources, or any combination thereof.

Python projects must have unique CamelCase names, which are registered

on PyPI. Each project will then contain one or more releases, and each

release may comprise one or more distributions.

There is a strong convention to name a project after the name of the

package which is imported to run that project e.g. FlyingDingo becomes

the project name when ../flyingdingo is the package name that gets

imported in order to run the project.

Example

A Python project consists at least of two files living side by side in

the same directory — a setup.py file which describes the metadata of

the project, and a Python module containing Python source code to

implement the functionality of the project. However, usually the

minimal layout of a project contains a little more than just a

setup.py and a module.

It is wise to create a full Python package i.e. a directory with an

__init__.py file, called ../flyingdingo. Doing so is good practice as

it anticipates future growth as the project's source code is likely to

grow beyond a single module.

Next to the Python package a project should also have a README.txt

file describing the project, a AUTHORS.txt providing basic information

about the programmer(s) such as contact information and there should

also be a LICENSE.txt file containing the software license and maybe

also information related to intellectual property matters.

The result will then look like this on the filesystem — it all starts

with the CamelCase project directory FlyingDingo at the root:

sa@wks:~$ type ta; ta FlyingDingo/

ta is aliased to `tree --charset ascii -a -I \.git*\|*\.\~*\|*\.pyc'

FlyingDingo/

|-- flyingdingo

| `-- __init__.py

|-- AUTHORS.txt

|-- LICENSE.txt

|-- README.txt

`-- setup.py

1 directory, 4 files

sa@wks:~$

Note that ta from above is just an alias in my ~/.bashrc.

Release

A snapshot of a project at a particular point in time, denoted by a

version identifier.

Making a release may entail the publishing of multiple distributions

as we might release for several platforms. For example, if version 1.0

of a project was released, it could be available in both a source

distribution format and a Windows installer distribution format.

Files

There are lots of files used for various things:

setup.py

Update: note that setup.py is going to be deprecated with the

introduction of packaging which will use setup.cfg — in other words:

setup.cfg is the new setup.py.

setup.py is Python's answer to a multi-platform installer and

make file. In other words: setup.py in combination with either

distutils/setuptools/distribute can be thought off the equivalent to

make && make install — it translates to python setup.py build &&

python setup.py install.

Some packages are pure Python and are only byte-compiled, other

packages may also contain native C code which will require a native

compiler like gcc or cl and some Python interfacing module like swig

or pyrex.

Generally setup.py can be thought of being at the core of

packaging/distributing/installing Python software.

setup.cfg

It is a configuration file local to some package which is used to

record configuration data for a particular package. setup.cfg is the

last one of three layers where Python looks for configuration

information.

At first it looks at the system-wide configuration file e.g.

/usr/lib/python<version>/distutils/distutils.cfg, next it looks at our

personal settings e.g. ~/.pydistutils.cfg and lastly it looks local to

a package i.e. setup.cfg.

Any of those levels overrides the former one e.g. personal overrides

system-wide, package-local overrides personal and of course,

package-local also overrides system-wide.

Note that with the introduction of packaging into the standard library

in Python 3.3, setup.cfg replaces setup.py.

__init__.py

Files named __init__.py are used to mark directories on disk as

so-called Python packages — basically a sort of meta-module

containing other modules.

Every time we use import, Python goes off and looks for the stuff we

actually want to import (e.g. a package, a module, a class, a

function, etc.) in the module search path know to it at the time. More

information on the matter can be found here.

Next to signifying that a directory is a Python package, __init__.py

files can also be used to carry out initialization as all code in that

file is executed the first time we import the package, or any module

from the package. However, the vast majority of __init__.py files are

empty simply because most packages do not need to initialize anything.

An example in which we may want initialization is when we want to read

in a bunch of data once at package-load time (e.g. from files, a

database, the web...), in which case it is much nicer to put that

reading in a private function in the package's __init__.py rather than

have a separate initialization module and redundantly import that

module from every single real module in the package.

By using __init__.py for this use case we can elegantly avoid the

repetitive and error-prone task but rather rely on the language's

guarantee that the package's __init__.py is loaded once before any

module in the package, which is obviously much more pythonic.

Last but not least, if we want to specify the public API for a package

then we put __all__ inside __init__.py which carries the same

semantics as putting __all__ inside a module to specify the modules

public API.

models.py

All we just said about __init__.py is true for models.py with Django

as well — it can be used and behaves exactly like __init__.py, same

semantics.

site.py

site.py is run when our interpreter starts. It loads a few things from

the builtins module, and adds some paths to the module search paths

like per-user paths and the like.

Keywords

As every other programming language out there, Python has keywords

too:

>>> import keyword

>>> from pprint import pprint as pp

>>> pp(keyword.kwlist)

['False',

'None',

'True',

'and',

'as',

'assert',

'break',

'class',

'continue',

'def',

'del',

'elif',

'else',

'except',

'finally',

'for',

'from',

'global',

'if',

'import',

'in',

'is',

'lambda',

'nonlocal',

'not',

'or',

'pass',

'raise',

'return',

'try',

'while',

'with',

'yield']

>>>

Coding Style

Python coding style and guidelines have PEP 8 and the Zen of Python at

their core. In addition, there are docstrings which are also part of

adhering to good coding style in Python. However, there is more than

just PEP 8 and PEP 257.

Why have a Coding Style?

As project size increases, the importance of consistency increases

too. Most projects start with isolated tasks, but will quickly

integrate the pieces into shared libraries as they mature. Testing and

a consistent coding style are critical to having trusted code to

integrate and can be considered main pillars of quality assurance.

Also, guesses about naming and interfaces will be correct more often

than not which can greatly enhance developer experience and

productivity.

Good code is useful to have around for several reasons: Code written

to these standards should be useful for teaching purposes, and also to

show potential employers during interviews. Most people are reluctant

to show code samples — but then having good code that we have written

and tested will put us well ahead of the crowd. Also, reusable

components make it much easier to change requirements, refactor code

and perform analyses and benchmarks.

With good coding standards in the end everybody wins: Developers

because there will be less bugs and guessing which means there will be

more time to innovate and do bleeding-edge stuff which is a lot more

fun compared to hunting down and fixing bugs all the time. Marketing

will be happy because TtM (Time to Market) will be reduced, and new

features delivered faster. Management will be happy because RoI

(Return on Investment) will go up and at the same time administrative

costs will go down. Last but not least, users will appreciate the fact

that there will be less bugs and more new features more often.

EAFP

EAFP (Easier to ask for Forgiveness than Permission) is a

programming principle how to approach problems/things when

programming. This clean and fast style is characterized by the

presence of many try and except statements.

It is nothing Python specific but can actually be found with many

programming languages. With Python however, because of it is nature,

adhering to this principle works quite well. In Python EAFP is

generally preferred over LBYL (Look before you Leap), which is the

contrary principle and, for example, the predominant coding style with

C.

Pound Bang Line, Shebang Line

Executable scripts on Unix-like systems may have something like

#!/bin/sh, #!/usr/bin/env, #!/usr/bin/perl -w or #!/usr/bin/python

show in their first line. The program loader takes the presence of #!

as an indication that the file is an executable script, and tries to

execute that script using the interpreter specified by the rest of

line.

File Permissions

File permissions are set depending on umask which is why we usually

end up with file permissions of 644:

sa@wks:~$ umask

0022

sa@wks:~$ touch foo.py

sa@wks:~$ ls -l foo.py

-rw-r--r-- 1 sa sa 0 Apr 20 18:56 foo.py

sa@wks:~$

Now, in order to execute foo.py it needs to have at least read

permissions in case we want to run it like this

sa@wks:~$ echo 'print("Hello World")' > foo.py; cat foo.py

print("Hello World")

sa@wks:~$ python foo.py # using the interpreter directly

Hello World

sa@wks:~$

but it needs to be executable plus have its pound bang line in case we

would want it to run like this

sa@wks:~$ echo -e '#!/usr/bin/env python\nprint("Hello World")' > foo.py; cat foo.py

#!/usr/bin/env python

print("Hello World")

sa@wks:~$ ls -l foo.py; ./foo.py

-rw-r--r-- 1 sa sa 43 Apr 20 18:59 foo.py

bash: ./foo.py: Permission denied

sa@wks:~$ chmod 755 foo.py

sa@wks:~$ ls -l foo.py

-rwxr-xr-x 1 sa sa 43 Apr 20 18:59 foo.py

sa@wks:~$ ./foo.py # no ./ needed if current dir is in PATH

Hello World

sa@wks:~$

Underscore, Gettext

A single underscore (_) should only be used in conjunction with

gettext. Go here for more information.

for loop

Sometimes people use loop variables such as _ which is not a good idea

as outlined below. It is recommended to use things like each or i. Do

this

>>> for each in range(2):

... print(each)

...

...

0

1

>>>

or this

>>> for i in range(2):

... print(i)

...

...

0

1

>>>

but not this

>>> for _ in range(2):

... print(_)

...

...

0

1

>>>

Using _ as often suggested is a bad idea because it collides with the

gettext marker i.e. as soon as we start internationalizing our code,

we will have to refactor any non-gettext use of _. _ is useful in

interactive interpreter sessions only.

Single Quotes vs Double Quotes

There are 4 ways we can quote strings in Python:

"string"'string'"""string"""'''string'''

Semantically there is no difference in Python i.e. we can use either.

The triple string delimiters """ and ''' are mostly used to simplify

multi-line docstrings. There are also the raw string literals r"..."

and r'...' to inhibit \ escapes.

>>> print('This is a string using a single quote!')

This is a string using a single quote!

>>> print("This is a string using a double quote!")

This is a string using a double quote!

>>> print("""Using tiple quotes

... we can do

... multiline strings.""")

Using tiple quotes

we can do

multiline strings.

>>>

This example, shows that single quotes (') and double quotes (") are

interchangeable. However, when we want to work with a contraction,

such as don't, or if we want to quote someone quoting something then

this is what happens:

>>> print("She said, "Don't do it")

File "<stdin>", line 1

print("She said, "Don't do it")

^

SyntaxError: invalid syntax

>>>

What happened? We thought double and single quotes are

interchangeable. Well, truth is, they are for the most part but not

always. When we try to mix them, it can often end up in a syntax

error, meaning that our code has been entered incorrectly, and Python

does not know what we are trying to accomplish.

What really happens is that Python sees our first double quote and

interprets that as the beginning of our string. When it encounters the

double quote before the word Don't, it sees it as the end of the

string. Therefore, the letters on after the second double quote make

no sense to Python, because they are not part of the string. The

string does not begin again until we get to the single quote before

the letter t. However, there is a trivial solution to this problem,

known as backslash (\) escape:

>>> print("She said, \"Don't do it\"")

She said, "Don't do it"

>>>

Finally, let us take a moment to discuss the triple quote. We briefly

saw its usage earlier. In that example, we saw that the triple quote

allows we to write some text on multiple lines, without being

processed until we close it with another triple quote. This technique

is useful if we have a large amount of data that we do not wish to

print on one line, or if we want to create line breaks within our

code as shown below:

>>> print("""I said

... foo, he said

... bar and baz is

... what happened.""")

I said

foo, he said

bar and baz is

what happened.

>>>

There is another way to print text on multiple lines using the newline

(\n) escape character, which is the most common of all the escape

characters:

>>> print("I said\nfoo, he said\nbar and baz is\nwhat happened.")

I said

foo, he said

bar and baz is

what happened.

>>>

Note that we did not have to use triple quotes in this case! Last but

not least, look what a simple r can do:

>>> print(r'I said\nfoo, he said\nbar and baz is\nwhat happened.')

I said\nfoo, he said\nbar and baz is\nwhat happened.

>>>

In this case r'...' determines a raw string literal which can be used

to inhibit the effect of backslash (\) escapes.

Recommendation

- single quotes for small symbol-like strings, but which will break

the rules if the strings contain quotes (lines 11 to 15), or if we

forget to add them (lines 7 to 10).

- double quotes around strings which are used for interpolation (line

2) or which are natural language messages, and

- triple double quotes for docstrings and raw string literals for

regular expressions, even if they are not needed (e.g. considered

best practises for Django URLconfs).

1 >>> anumber = 2

2 >>> "there are {} cats on the roof".format(anumber)

3 'there are 2 cats on the roof'

4 >>> CONSTANTS = {'keyfoo': "some string", 'keybar': "another string"}

5 >>> print(CONSTANTS['keyfoo'])

6 some string

7 >>> CONSTANTS[keyfoo]

8 Traceback (most recent call last):

9 File "<stdin>", line 1, in <module>

10 NameError: name 'keyfoo' is not defined

11 >>> CONSTANTS = {'keyfoo's number': "some string", 'keybar': "another string"}

12 File "<stdin>", line 1

13 CONSTANTS = {'keyfoo's number': "some string", 'keybar': "another string"}

14 ^

15 SyntaxError: invalid syntax

16 >>>

Docstrings and raw string literals (r'...') for regular expressions:

def some_function(foo, bar):

"""Return a foo-appropriate string reporting the bar count."""

return somecontainer['bar']

re.search(r'(?i)(arr|avast|yohoho)!', message) is not None

Docstring

A string literal which appears as the first expression in a class,

method, function or module. While ignored when the suite is executed,